This is Part 1 of a three-part series. Read Part 2 and Part 3 to get the full blueprint for T&E success.

The Viral Truth: When AI Disasters Go Public, What's Really at Stake?

The promise of Artificial Intelligence (AI) is transformative, offering unprecedented competitive advantages. It is no surprise that 42% of CIOs identify AI and Machine Learning (ML) as their top technology priority for 2025. Yet for every success story, there are spectacular public failures that capture the world's attention, serving as a harsh reminder that AI-driven mistakes can be irreversible.

These highly visible disasters, however, represent only the tip of a massive "failure iceberg". Consider the recent turmoil faced by two global giants, McDonald's and Air Canada, after they entrusted critical customer interactions to autonomous AI systems.

McDonald's Drive-Thru Blunder: After working with IBM for three years, McDonald's ended its AI drive-thru ordering partnership in June 2024. The decision followed a slew of social media videos showing confused customers; one viral video captured the AI system repeatedly adding Chicken McNuggets until the order reached a staggering 260. This failure demonstrates the risk of giving AI unconstrained autonomy and allowing a system to process orders without basic sanity checks or common-sense limits.

Air Canada’s Legal Nightmare: In February 2024, Air Canada was held liable after its virtual assistant gave a passenger, Jake Moffatt, incorrect information about bereavement fares. When the airline denied his refund claim and the dispute went to a tribunal, Air Canada argued that it should not be held liable, suggesting the chatbot was a "separate legal entity". The tribunal rejected this argument and ordered the airline to pay CA$812.02 because the company failed to take "reasonable care to ensure its chatbot was accurate". This outcome set a critical precedent: companies are liable for their AI’s promises and cannot hide behind autonomous AI decisions.

These incidents make headlines, but the true financial peril of AI lies hidden beneath the surface.

The Staggering Scale of Enterprise AI Failure: Unpacking the AI Paradox

Despite record investment, a deeply troubling reality persists, often called the Enterprise AI Paradox: massive capital allocation meets underwhelming returns. The data paints a stark picture of widespread failure and abandonment.

- Widespread Failure Rate: An estimated 70% to 85% of AI projects fail, roughly twice the already-high failure rate of traditional non-AI IT projects.

- Zero ROI from Pilots: An MIT study found that an astonishing 95% of enterprise generative AI pilots deliver zero measurable return on investment (ROI). This is a chilling statistic for leaders investing in new Travel & Expense Reporting initiatives.

- Surging Abandonment: The rate at which projects are discarded before reaching production is accelerating dramatically. S&P Global reported that 42% of companies are now abandoning the majority of their AI initiatives, a sharp surge from just 17% the previous year.

These aborted projects represent billions of dollars in lost resources, creating massive "implementation debt" on corporate balance sheets. Crucially, the costliest failures are not the rare, public production disasters; they happen quietly before launch and end in abandonment.

The Core Misconception: The Human and Data Roots of Technical Failures

The widespread nature of this failure points to a deeper, systemic issue. While conventional thinking might attribute the high failure rate to immature technology, analysis indicates that the most frequent and impactful causes are leadership-driven.

AI projects fail at such a high rate because their problems are often rooted in strategy, process, and people. Technical issues like "poor data quality" are frequently mere symptoms of more fundamental human and organizational dysfunctions. For example, in Travel & Expense reporting, data collected for compliance often lacks the context or granularity needed for effective AI model training. The problem isn't the technology itself but the human element: a pervasive lack of organizational AI literacy and a crisis of confidence within the workforce.

This reframing is critical: the technical problems are the fever, but the underlying infection is organizational. The challenge is not technological maturity but a profound lack of organizational readiness.

The PredictX Difference: A New Approach to T&E Success

At PredictX, we understand that a successful Travel & Expense (T&E) program requires more than just a new tool; it demands a fundamental shift in how you use your data. Our platform is purpose-built to address the root causes of AI failure, moving you beyond the paradox toward proactive, data-driven decision-making.

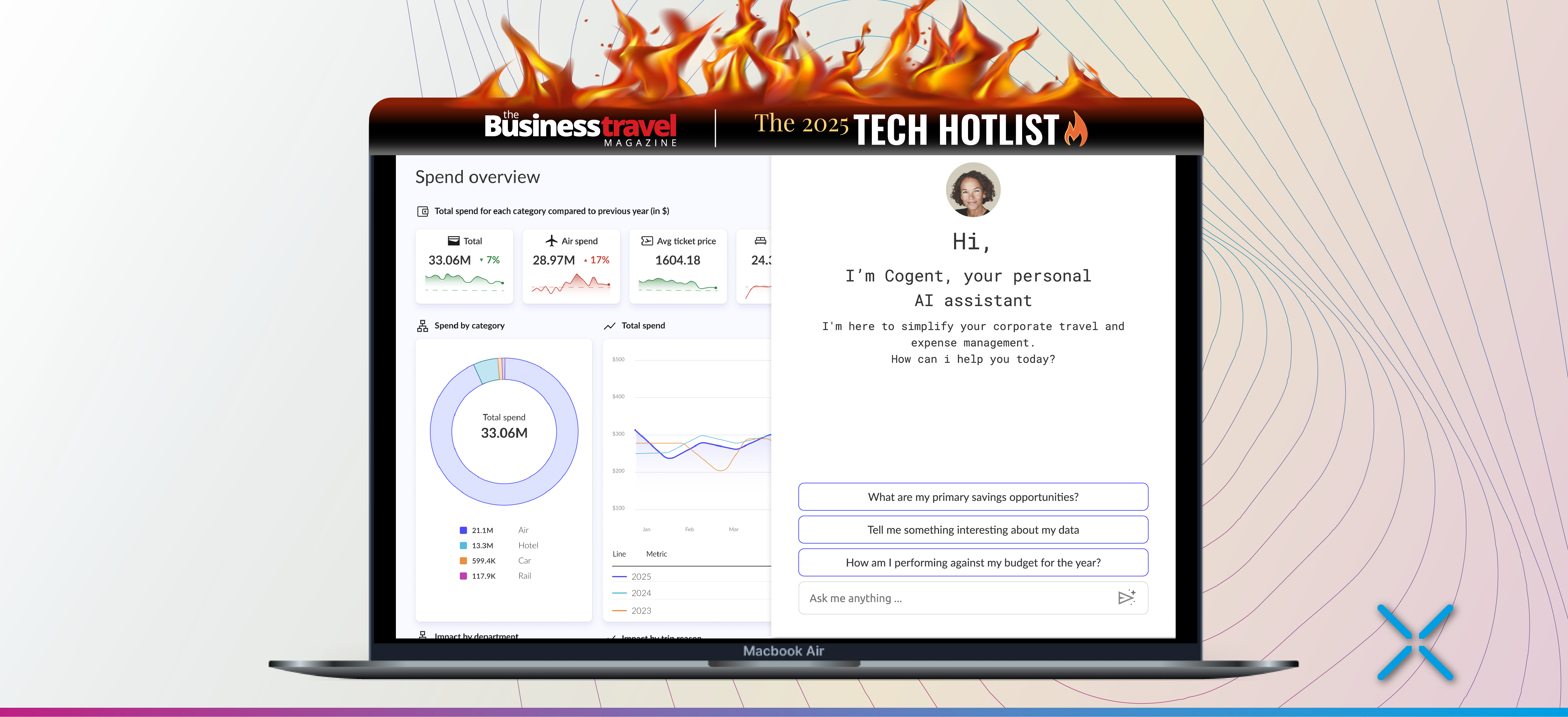

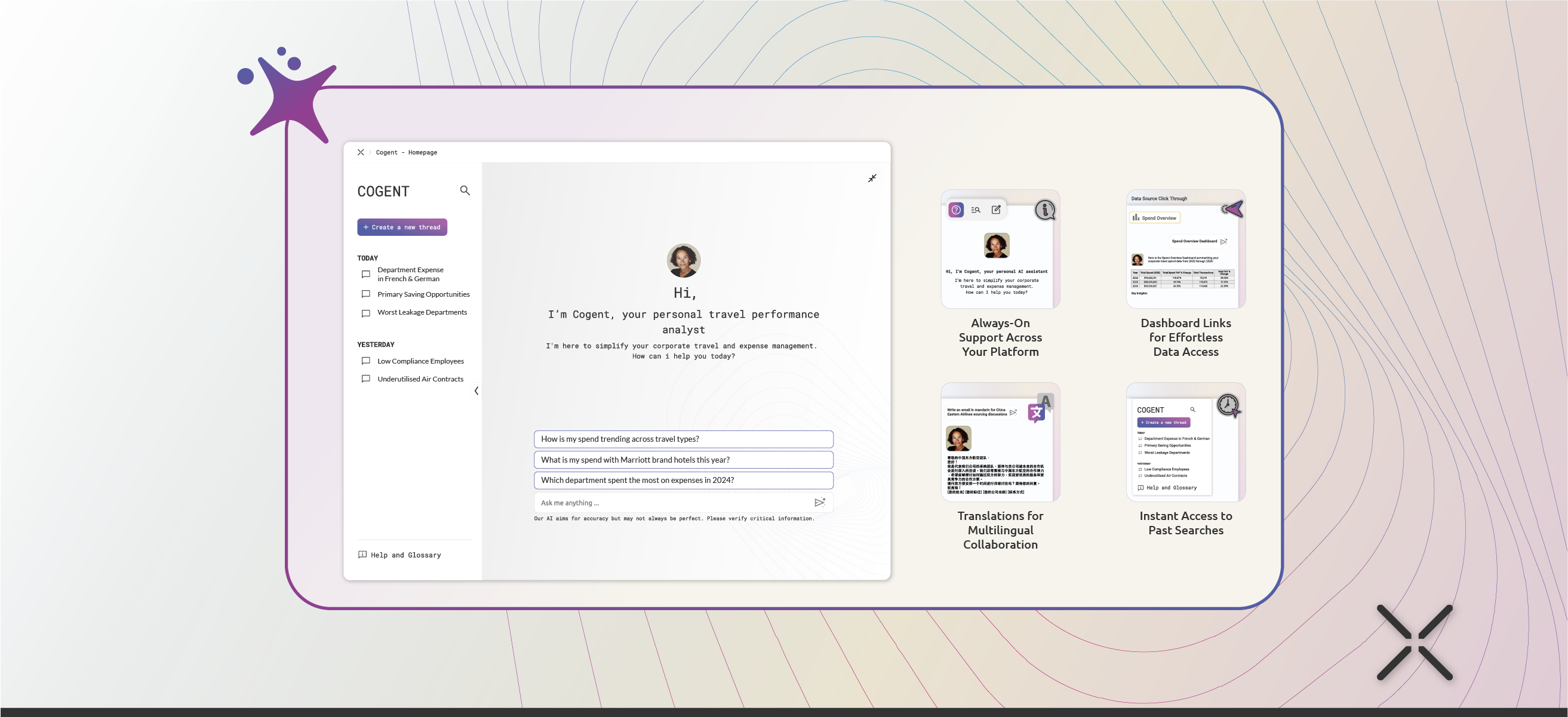

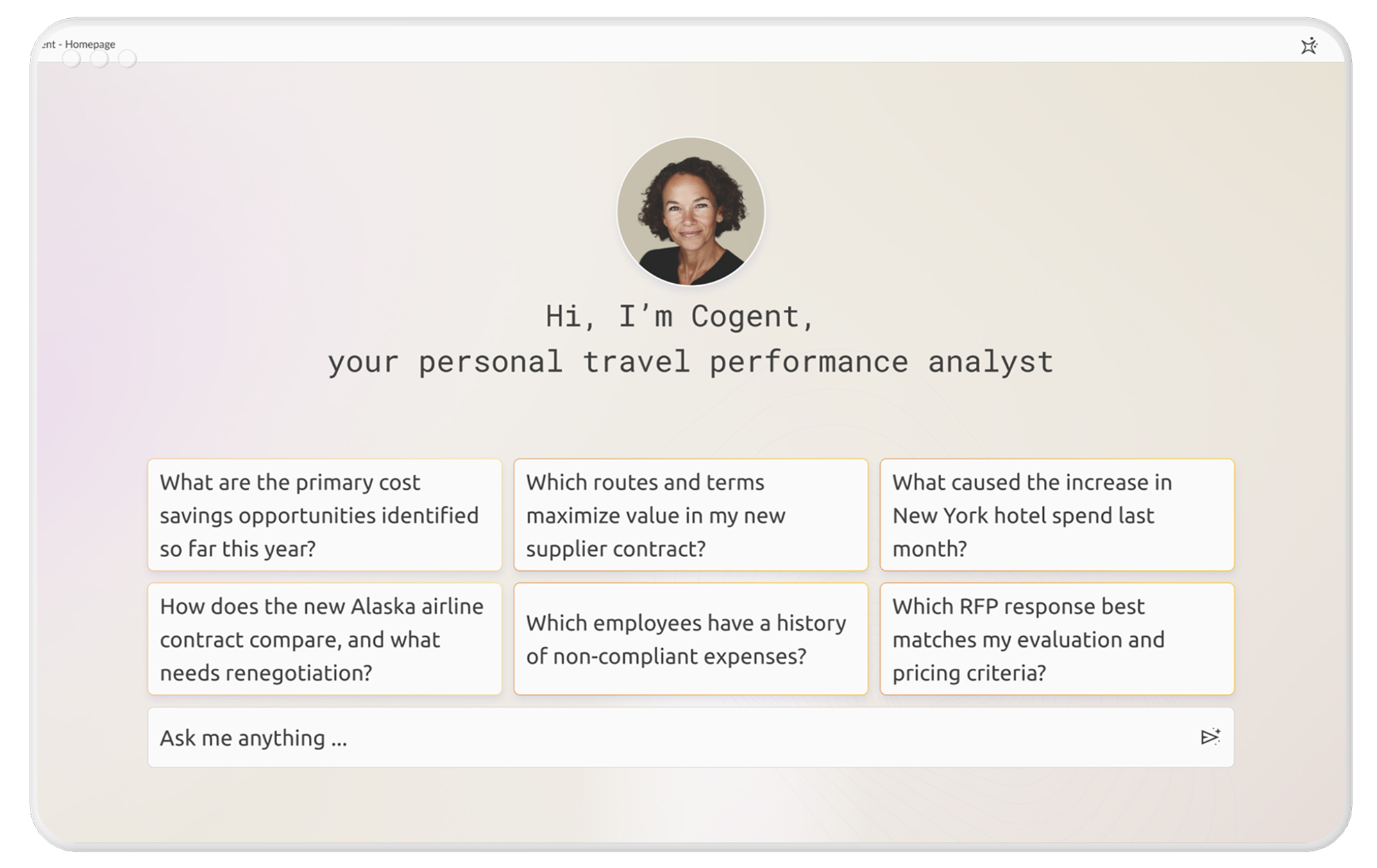

The PredictX platform provides the high-level framework that makes AI work. It solves the foundational data problem by ingesting data from more than 200 connectors, covering travel management companies (TMCs), expense systems, and credit cards, to create a single consolidated data source with 99.9% accuracy. This eliminates data fragmentation and ensures your AI is built on a solid, reliable foundation. Cogent Agentic AI, our award-winning workspace, is built specifically to use this clean data, providing intelligent, contextual answers to complex T&E challenges that generic chatbots or static dashboards cannot deliver.

This is Part 1 of our journey. In Part 2, we will dive deeper into the specific strategic and data-centric flaws that derail most projects and how an Agentic AI solution can help you overcome them.

Related Posts

Part 2: Beyond the Code: The Strategic and Data Flaws Ensuring Your AI Project Fails

Part 3: The Agentic AI Revolution: How to Join the 5% Who Succeed by Investing in People
